Mining JobMine - Fall 2010 - Part 3

This is the third part of a multi-part series; read the first part here and the second part here.

JobMine is the tool we use at the University of Waterloo for the co-op program. For each job in JobMine, the employer writes a description of the job. In this article I'll dig into those job descriptions, applying some of the techniques I talked about in the earlier articles.

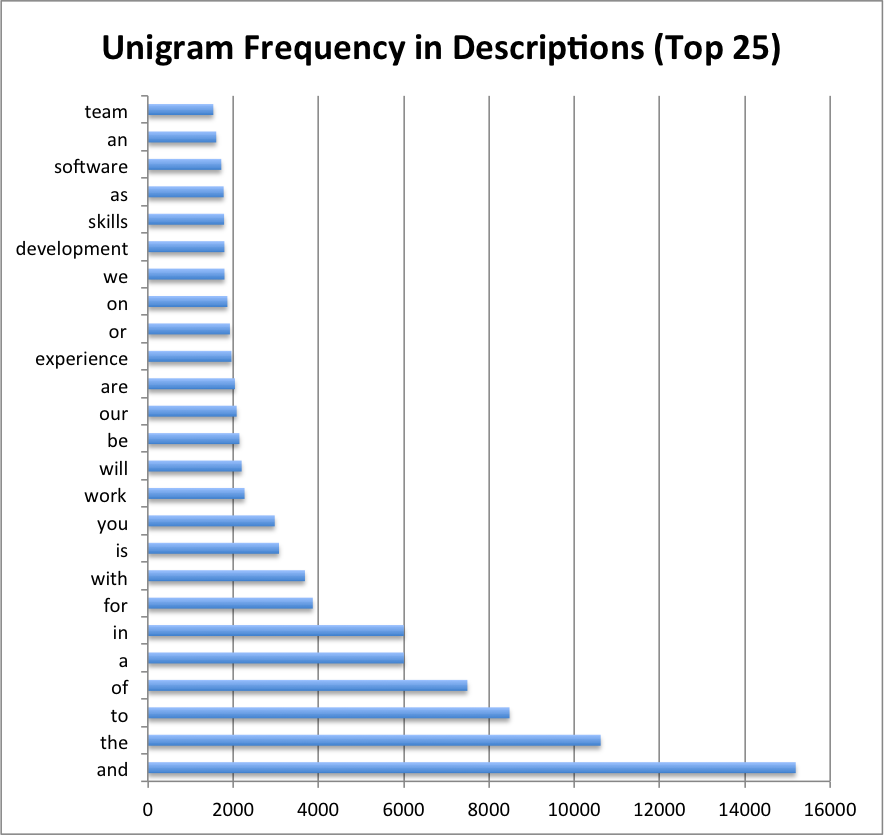

##Unigrams##

Just like I did in the first article, I tokenized the descriptions and collected the most common tokens.
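The tokenizer from the original script isn't shown, so here is a minimal sketch of this step, assuming a simple lowercase regex tokenizer; `tokenize` and `top_unigrams` are hypothetical names, and the two descriptions are made up. `collections.Counter` does the counting:

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens (letters, digits, apostrophes)."""
    return re.findall(r"[a-z0-9']+", text.lower())

def top_unigrams(descriptions, k=10):
    """Count tokens across all descriptions and return the k most common."""
    counts = Counter()
    for description in descriptions:
        counts.update(tokenize(description))
    return counts.most_common(k)

# Made-up descriptions, for illustration only.
descriptions = [
    "The student will work with the development team.",
    "Experience with Python is an asset for the role.",
]
print(top_unigrams(descriptions, k=3))
```

Even on two sentences, filler words like 'the' and 'with' rise straight to the top.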


Ugh, that's not very useful at all! The most common tokens are simply the most common words in English in general. Thankfully, there are ready-made lists of such words; they're called stop words.

##Stop Words##

Stop words are common words that don't give much insight into a text, like 'the', 'and', 'or' and 'but'. People trying to classify text or extract meaning from it often remove stop words first. The idea is that removing them reduces noise in the data and shrinks the feature space.

I looked into the effectiveness of stop words in my Twitter Sentiment Extraction project.

Now I need a list of stop words to remove. Since I'm a fan of NLTK (the Natural Language Toolkit) for Python, I'm going to use its built-in stop word list.
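NLTK ships this list as a corpus, read with `stopwords.words("english")` from `nltk.corpus` after a one-time `nltk.download("stopwords")`. Below is a minimal sketch that loads it as a set for fast membership checks. The `load_stop_words` name and the small fallback subset are my own illustration, not part of NLTK, and the exact list differs between NLTK versions:

```python
def load_stop_words():
    """Return NLTK's English stop words as a set, or a tiny fallback set
    if NLTK or its 'stopwords' corpus isn't installed."""
    try:
        from nltk.corpus import stopwords
        return set(stopwords.words("english"))
    except (ImportError, LookupError):
        # Hypothetical fallback: a small subset of the NLTK list, for illustration only.
        return {"i", "me", "my", "we", "you", "he", "she", "it", "they",
                "a", "an", "the", "and", "but", "if", "or", "of", "at",
                "by", "for", "with", "to", "from", "in", "on", "is", "are"}

STOP_WORDS = load_stop_words()
print(len(STOP_WORDS), "stop words loaded")
```

A set is used instead of the returned list because the tokenizer checks every token against it.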

###NLTK Stop Words###

I've reproduced the list here so you can get an idea of what will be removed. Again, please check out the NLTK website for more information.

i, me, my, myself, we, our, ours, ourselves, you, your, yours, yourself, yourselves, he, him, his, himself, she, her, hers, herself, it, its, itself, they, them, their, theirs, themselves, what, which, who, whom, this, that, these, those, am, is, are, was, were, be, been, being, have, has, had, having, do, does, did, doing, a, an, the, and, but, if, or, because, as, until, while, of, at, by, for, with, about, against, between, into, through, during, before, after, above, below, to, from, up, down, in, out, on, off, over, under, again, further, then, once, here, there, when, where, why, how, all, any, both, each, few, more, most, other, some, such, no, nor, not, only, own, same, so, than, too, very, s, t, can, will, just, don, should, now

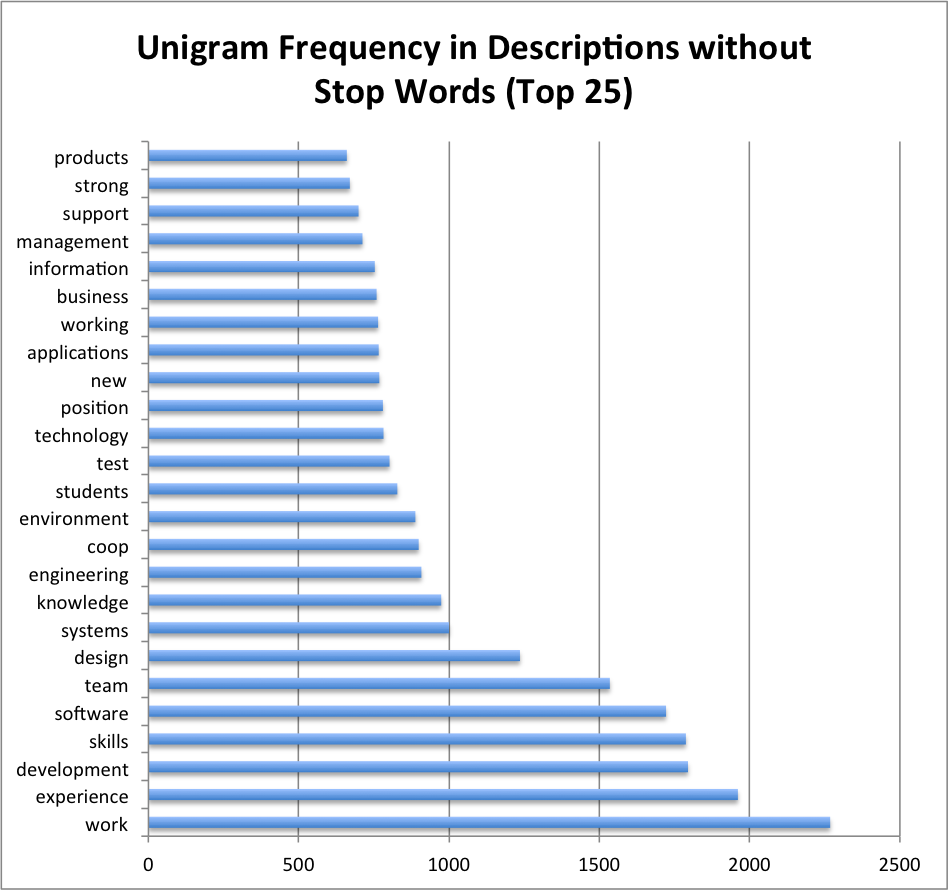

##Unigrams - Stop Words Removed##

So I removed every token in the stop word list inside my tokenizer function.
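A minimal sketch of a tokenizer that drops stop words as it goes, assuming a regex tokenizer; the original function isn't shown. `STOP_WORDS` here is a small hypothetical subset of the NLTK list so the example runs without downloading anything, and the descriptions are made up:

```python
import re
from collections import Counter

# Hypothetical subset of the NLTK English stop words; in practice use
# set(nltk.corpus.stopwords.words("english")).
STOP_WORDS = {"the", "a", "an", "and", "or", "but", "with", "for", "of",
              "to", "in", "is", "will", "be", "our", "you", "we"}

def tokenize(text, stop_words=STOP_WORDS):
    """Lowercase, split into word tokens, and drop any token in stop_words."""
    return [t for t in re.findall(r"[a-z0-9']+", text.lower())
            if t not in stop_words]

def top_unigrams(descriptions, k=10):
    """Most common non-stop-word tokens across all descriptions."""
    counts = Counter()
    for description in descriptions:
        counts.update(tokenize(description))
    return counts.most_common(k)

# Made-up descriptions, for illustration only.
descriptions = [
    "You will work with our development team.",
    "Experience with development tools is an asset; work is varied.",
]
print(top_unigrams(descriptions, k=3))
```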


Ah, now that's better. Removing those stop words really seems to help. I did not expect 'work' to be the most common token in the job descriptions, but 'experience' and 'development' were no surprise.

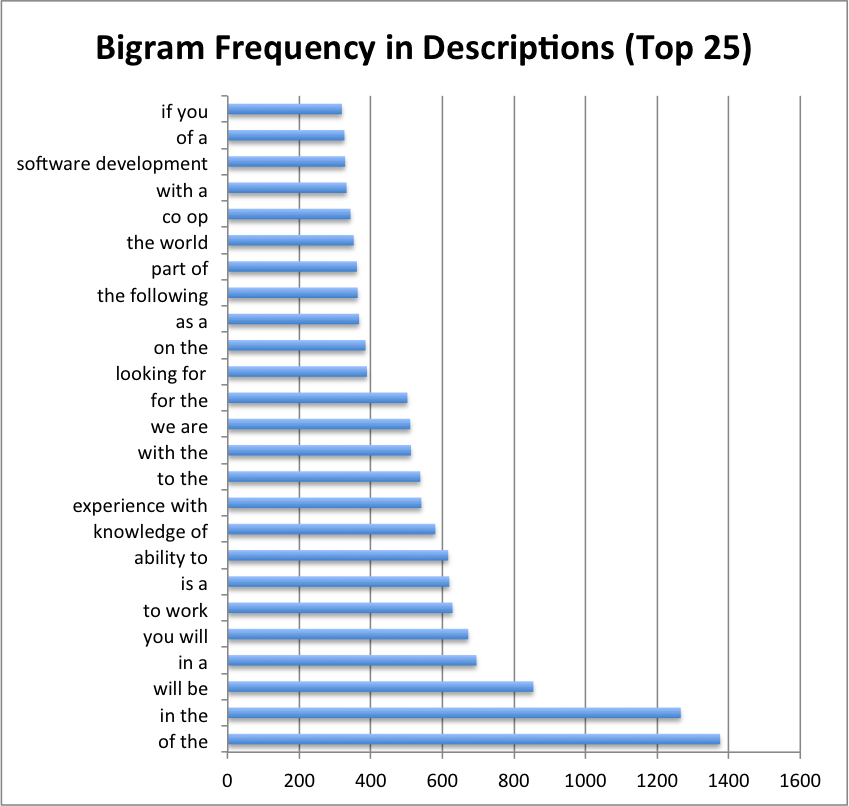

##Bigrams##

That covers what I talked about in the first article. Now I'm going to apply the topics covered in the second article: bigrams and trigrams.

Without further ado, here are the most common bigrams:


Hmm, same problem as before. While some of the bigrams may be interesting ('experience with' or 'knowledge of'), I think there are more interesting bigrams hidden in the noise.
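A minimal sketch of the bigram count, assuming a simple lowercase regex tokenizer; `tokenize`, `bigrams` and `top_bigrams` are hypothetical names, not taken from the original script. Zipping the token list with itself shifted by one yields each adjacent pair:

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def bigrams(tokens):
    """Pair each token with the one after it: [a, b, c] -> [(a, b), (b, c)]."""
    return list(zip(tokens, tokens[1:]))

def top_bigrams(descriptions, k=10):
    """Most common adjacent word pairs across all descriptions."""
    counts = Counter()
    for description in descriptions:
        # Pairs are built per description so none span two postings.
        counts.update(bigrams(tokenize(description)))
    return counts.most_common(k)

# Made-up descriptions, for illustration only.
descriptions = [
    "Experience with Java and knowledge of SQL.",
    "Knowledge of Linux; experience with C.",
]
print(top_bigrams(descriptions, k=2))
```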

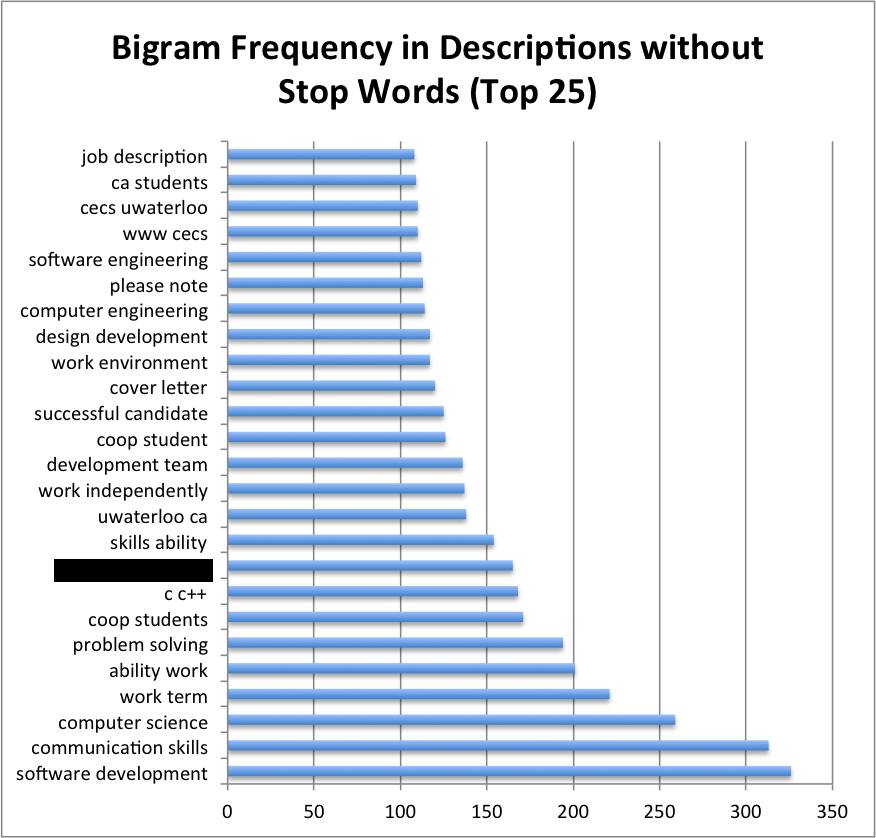


The bigrams that don't contain stop words seem much more interesting. Looks like removing stop words is working.
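The post doesn't say exactly how stop words were removed for bigrams. One plausible reading, sketched here, is to count only pairs in which neither word is a stop word. The alternative, deleting stop words before pairing, would create pairs like 'skills software' that never appear side by side in the text. `STOP_WORDS` is a small hypothetical subset of the NLTK list, `content_bigrams` is an illustrative name, and the descriptions are made up:

```python
import re
from collections import Counter

# Hypothetical subset of NLTK's English stop words, for illustration.
STOP_WORDS = {"with", "of", "and", "the", "a", "in", "to", "is"}

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def content_bigrams(tokens, stop_words=STOP_WORDS):
    """Adjacent token pairs, skipping any pair that contains a stop word."""
    return [(a, b) for a, b in zip(tokens, tokens[1:])
            if a not in stop_words and b not in stop_words]

descriptions = [
    "Strong communication skills and software development experience.",
    "Software development in a team with strong communication skills.",
]
counts = Counter()
for description in descriptions:
    counts.update(content_bigrams(tokenize(description)))
print(counts.most_common(3))
```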

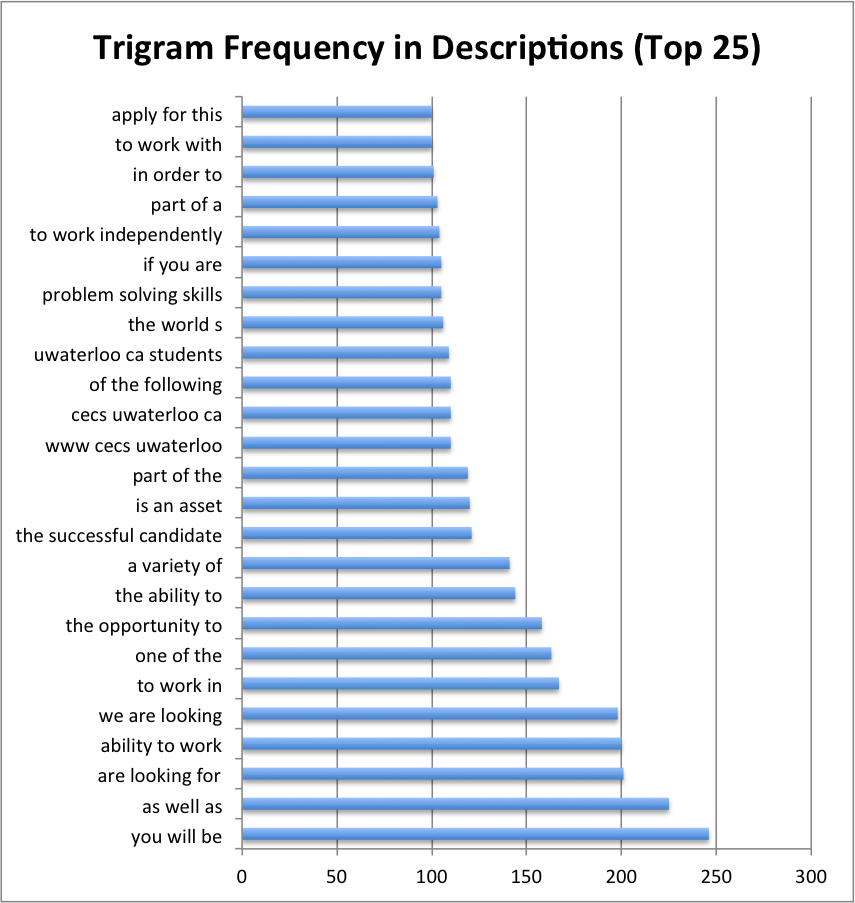

##Trigrams##

Previously, trigrams were not that interesting in the job titles. I hypothesized that this was because titles are so short, usually two to three words (I should run some stats on that…). Descriptions are usually much longer, so maybe their trigrams will be more interesting.


Now let’s try removing stop words…


There's some weird stuff near the top of the trigram list. The people who run JobMine (CECS) put a notice in the descriptions of positions outside of Canada. It's good to let people know about the possible issues with working internationally, but the notice skews the data: what we are really interested in is what employers write in their job descriptions. So I'll try to remove it.
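Since the notice is boilerplate pasted into international postings, one simple way to drop it is an exact string replacement before tokenizing. This sketch assumes the notice text is identical in every posting. `CECS_NOTICE` is a made-up placeholder because the real wording isn't reproduced here, and `strip_notice` is a hypothetical name. If the wording varied between postings, a regular expression would be needed instead:

```python
import re

# Hypothetical placeholder: the real CECS notice text isn't reproduced in this post.
CECS_NOTICE = "NOTE: This position is located outside of Canada."

def strip_notice(description, notice=CECS_NOTICE):
    """Remove every exact occurrence of the boilerplate notice and tidy whitespace."""
    cleaned = description.replace(notice, " ")
    return re.sub(r"\s+", " ", cleaned).strip()

posting = CECS_NOTICE + " Build tools for our data team in Boston."
print(strip_notice(posting))
```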

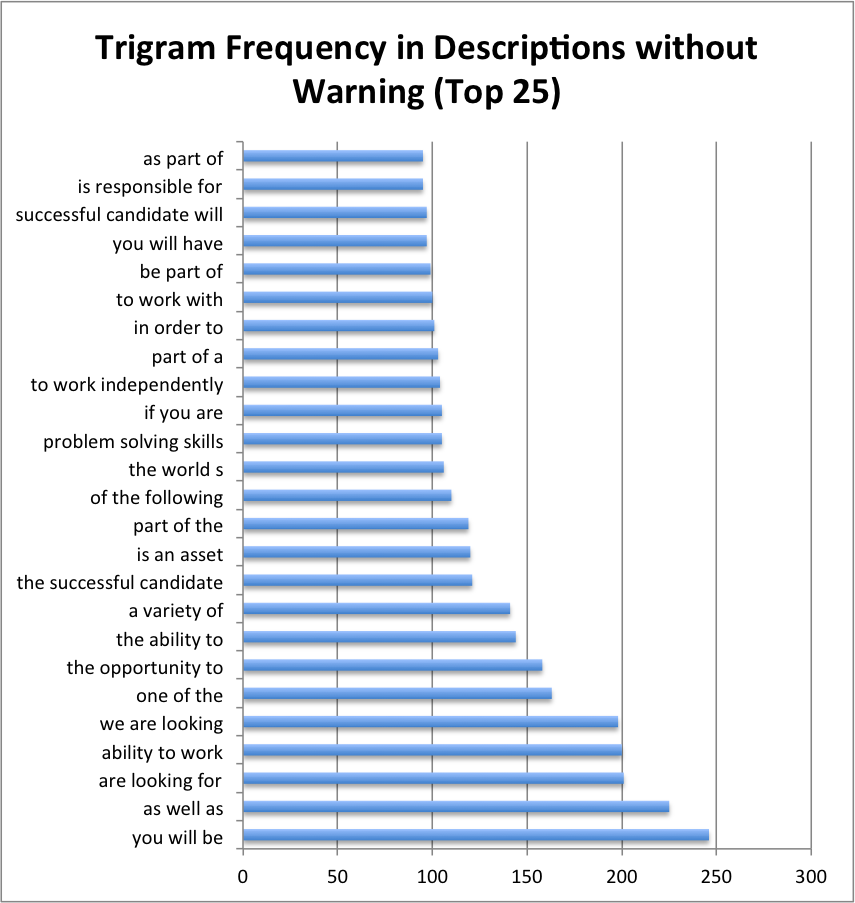

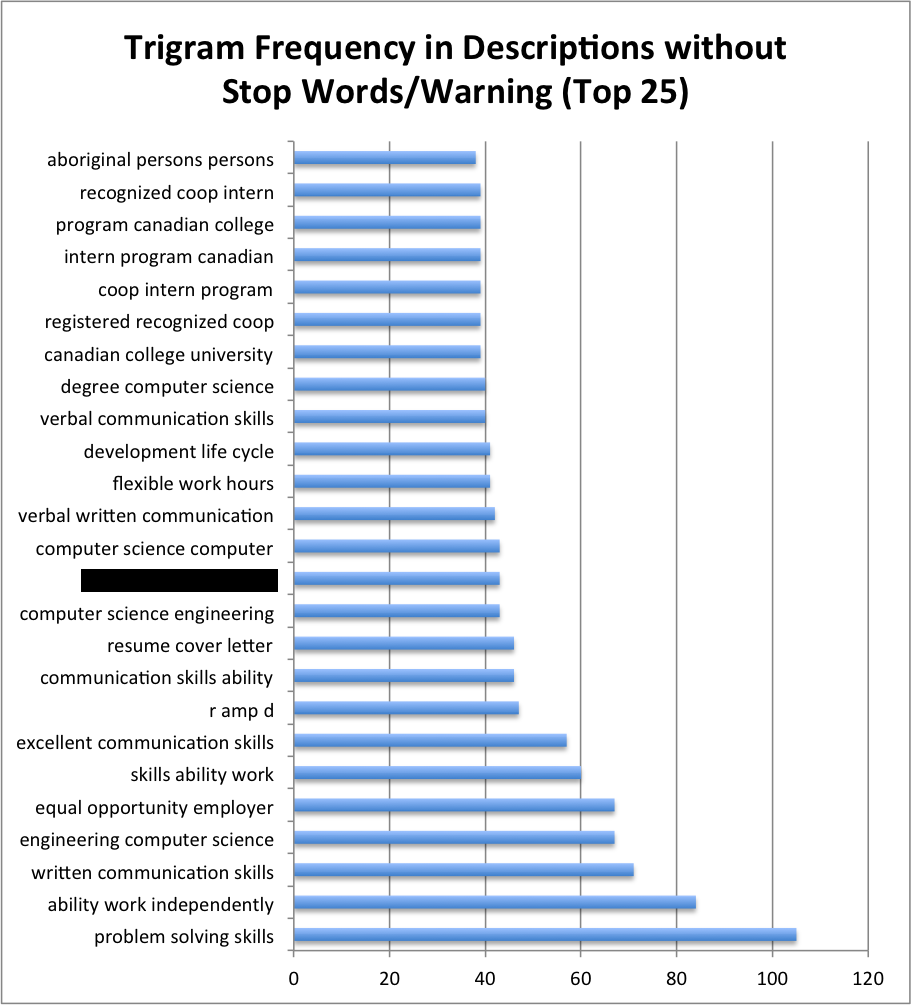

##Trigrams without Warning##

So I modified my script to remove the CECS warning from the job descriptions and plotted both charts. (I really should have written my script to produce a chart at the end instead of sending the results to Excel.)


Now let’s try removing stop words…


I've censored one of the trigrams, as it would have given away one of the companies on JobMine and I don't want to get in trouble with the university. In the future I'm going to try to play with public data sets so I don't have this issue.

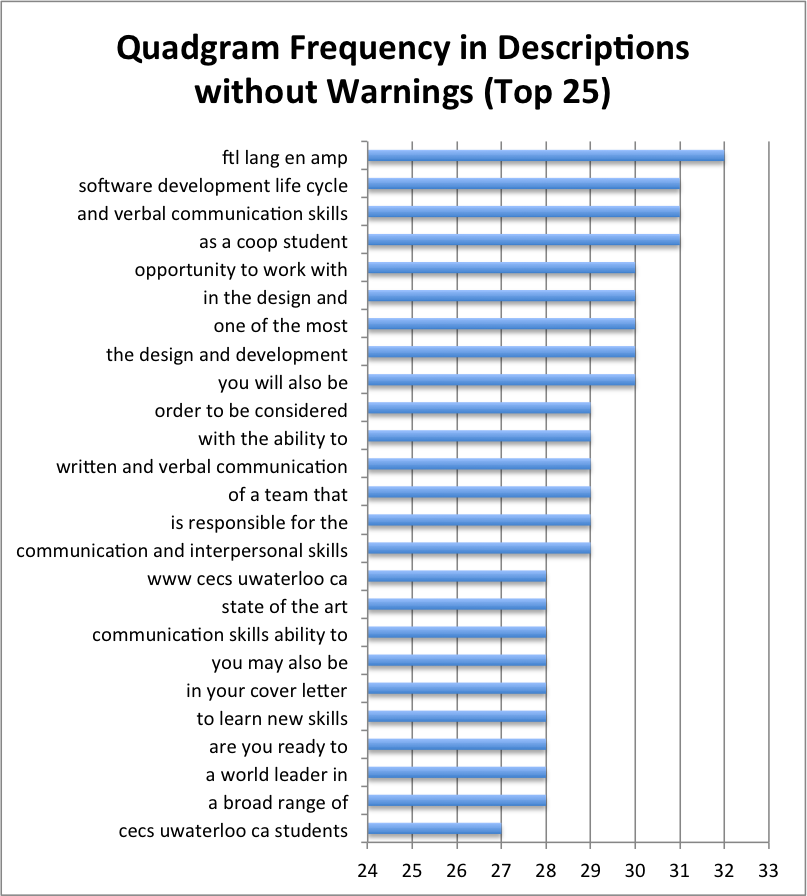

##Quadgrams##

Trigrams seem interesting, so how about setting n to 4 (quadgrams?). A few quick changes to the script and away we go…
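Going from bigrams to trigrams to quadgrams only changes the window size, so one way to make this a quick change is to take n as a parameter. Here is a sketch with hypothetical names (`ngrams`, `top_ngrams`) and made-up postings. NLTK also provides `nltk.util.ngrams(sequence, n)` for the windowing step. When `stop_words` is given, any gram containing a stop word is skipped:

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def ngrams(tokens, n):
    """All runs of n consecutive tokens, as tuples (empty if fewer than n tokens)."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def top_ngrams(descriptions, n, k=10, stop_words=frozenset()):
    """Most common n-grams; grams containing any stop word are skipped."""
    counts = Counter()
    for description in descriptions:
        for gram in ngrams(tokenize(description), n):
            if not any(token in stop_words for token in gram):
                counts[gram] += 1
    return counts.most_common(k)

# Made-up postings, for illustration only.
posts = [
    "We are looking for a motivated student",
    "We are looking for a creative student",
]
print(top_ngrams(posts, 4, k=1))
```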


Now let’s try removing stop words…


As you can see, the larger the value of n, the less frequent the top grams are. This makes sense: the chance that multiple employers choose the exact same sequence of n words in a job description decreases as n grows. This is a bit like the infinite monkey theorem. However, I wouldn't want to compare the employers to randomly typing monkeys…
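There is also a simple counting reason why the top frequency can only stay flat or fall as n grows, at least for plain contiguous n-grams. Every occurrence of an (n+1)-gram begins with an occurrence of its first n words, so no (n+1)-gram can be more frequent than the most common n-gram. Here is a small sketch on made-up descriptions; the `top_count` helper is hypothetical:

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def top_count(descriptions, n):
    """Frequency of the single most common n-gram (0 if there are none)."""
    counts = Counter()
    for description in descriptions:
        tokens = tokenize(description)
        counts.update(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return max(counts.values(), default=0)

# Made-up descriptions, for illustration only.
descriptions = [
    "Strong knowledge of object oriented design.",
    "Knowledge of object oriented programming is an asset.",
    "Some knowledge of databases.",
]
print([top_count(descriptions, n) for n in range(1, 5)])  # → [3, 3, 2, 2]
```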

##Next Time##

Looking at the descriptions was interesting, but I'm definitely not done with this dataset yet. Other things I'm thinking of doing next include looking at the lengths of job descriptions, their writing level (though that could be sticky) and maybe some other stats that step away from the text itself.

Hope you enjoyed this article; shoot me an email if you have something to say.